tg-me.com/echoinside/1875

Last Update:

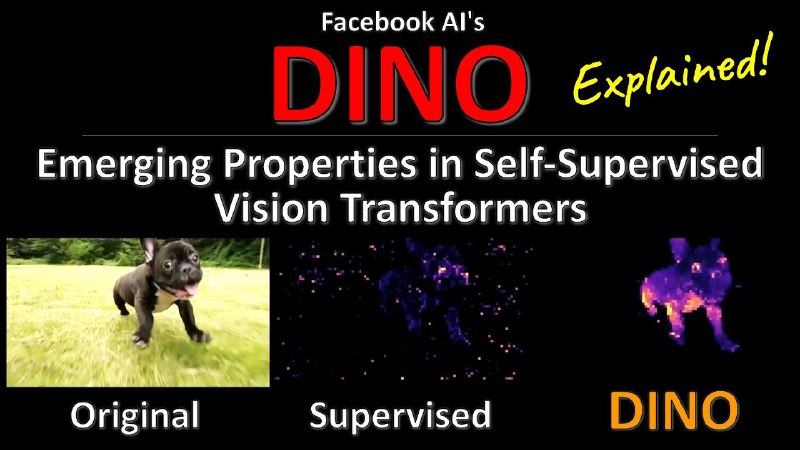

Emerging Properties in Self-Supervised Vision Transformers (DINO)

[Facebook AI Research, Inria, Sorbonne University]

(probably everyone already noticed)

* pdf, abs

* github

* blog

* YC review

tldr; DINO — self-distillation with no labels.

Non-contrastive SSL which looks like BYOL with some tweaks: local & global image crops sent to the student network, while only global crops sent to the teacher (multi-crop thing); cross entropy loss between teacher & student representations. Tested with resnet and vision_transformers, achieving better results with the latter. Works extremely well as a feature extractor for knn and simple linear models. It is also shown, that ViTs extract impressive class-specific segmentation maps in this unsupervised setting, which look much better than in supervised setting.

Also, batch size matters, and multi-crop matters. For example, the performance is 72.5% after 46 hours of training without multi-crop (i.e. 2×224^2) while DINO in 2×224^2+10×96^2 crop setting reaches 74.6% in 24 hours only. Memory usage is 15,4G vs 9.3G. So, the best result of 76.1 top1 accuracy is achieved using 16 GPUs for 3 days. And it is still better computational result than in the previous unsupervised works.

It is possible to use this method with small batches without multi-crop, but this setup wasn't studied well. Results with the smaller batch sizes (bs = 128) are slightly below our default training setup of bs = 1024, and would certainly require to re-tune hyperparameters like the momentum rates for example. We have explored training a model with a batch size of 8, reaching 35.2% after 50 epochs, showing the potential for training large models that barely fit an image per GPU.See images in comments.

#self_supervised

BY echoinside

Share with your friend now:

tg-me.com/echoinside/1875